Every few decades, a technology standard emerges that seems boring on the surface but transforms everything it touches. USB replaced a chaos of proprietary connectors with a universal interface. HTTP made it possible for any computer to talk to any web server. TCP/IP created the language that allowed the internet to exist. In 2025, a new standard called the Model Context Protocol, or MCP, is positioning itself to play a similar role for artificial intelligence.

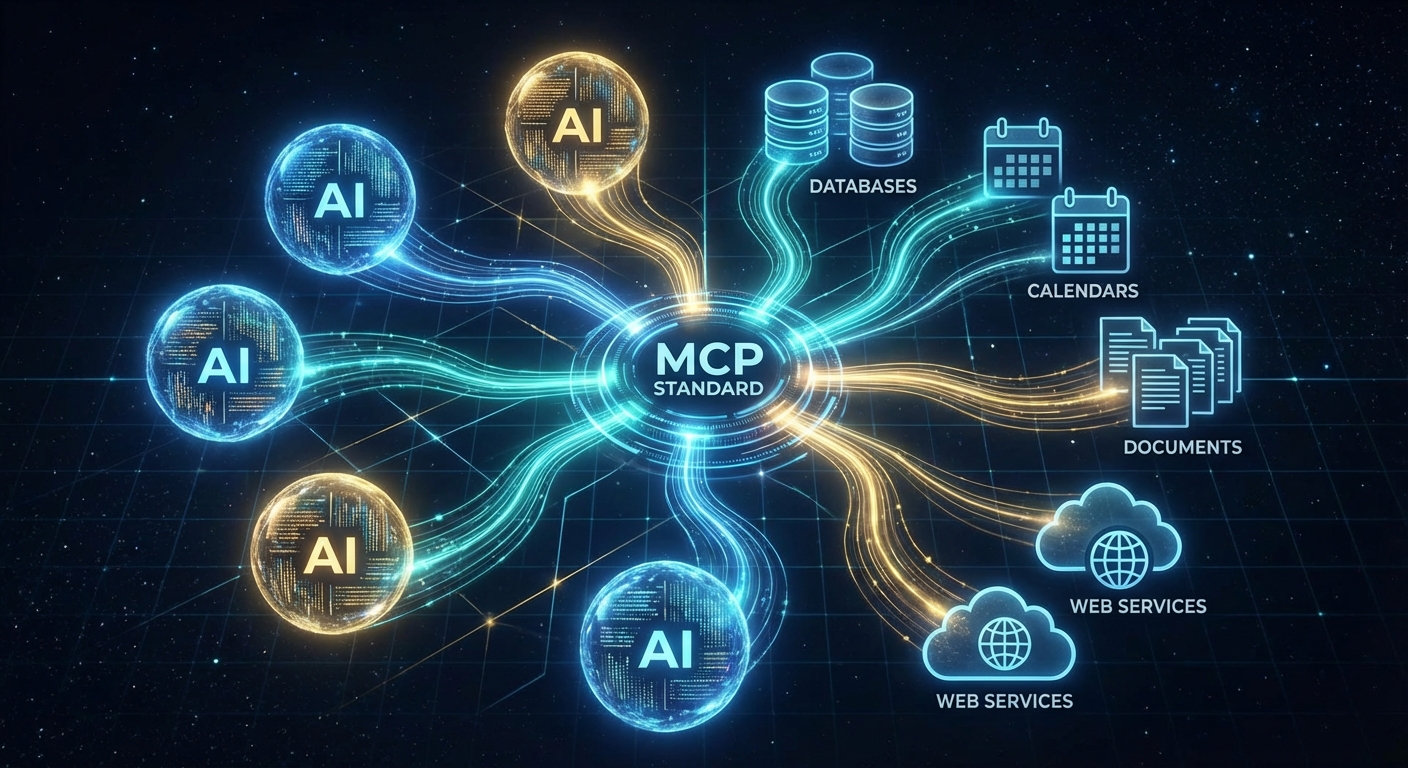

The comparison that has stuck is “USB-C for AI.” Like USB-C, which lets you connect any device to any computer without worrying about compatibility, MCP lets any compatible AI application connect to any external data source or tool without building a custom integration for each pairing. It sounds like a technical detail, the kind of thing only developers would care about. But the implications are profound for how AI systems will work, what they will be able to do, and who will control them.

The Problem MCP Solves

To understand why MCP matters, you need to understand a fundamental limitation of current AI systems. Large language models like GPT and Claude are trained on massive datasets, but that training has a cutoff date. They cannot access new information unless someone updates their training. More importantly, they cannot access private data (your emails, your calendar, your company’s internal documents) unless someone builds a specific connection.

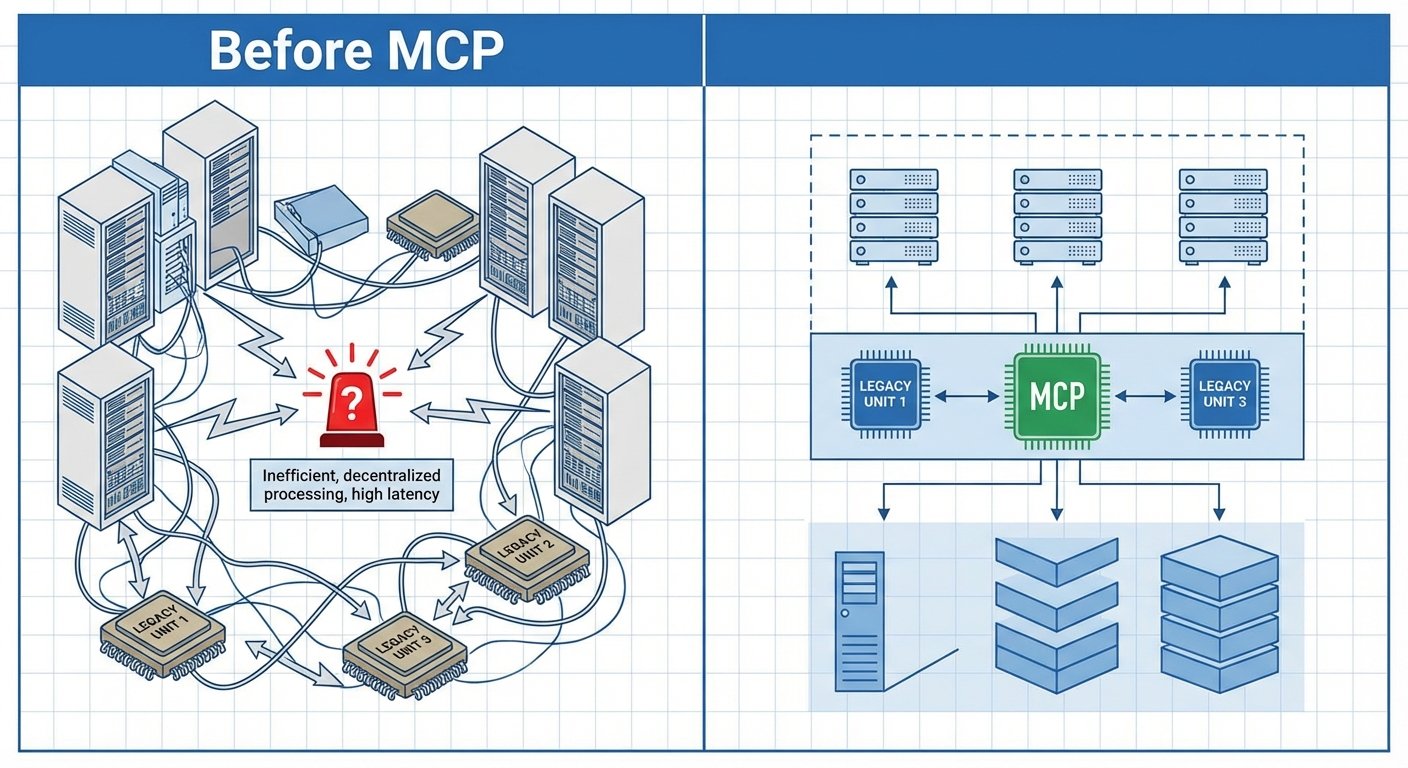

This creates what engineers call the “many-to-many problem.” There are dozens of AI models from different companies. There are thousands of tools and data sources those models might need to access. If each model needs a custom integration for each tool, the number of integrations grows multiplicatively: M models and N tools require M × N connectors, and every new model or tool adds a whole row or column of work. It quickly becomes unmanageable.
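The arithmetic behind the many-to-many problem is easy to sketch. The Python below compares point-to-point integration, where every model needs a connector for every tool (M × N), with a shared protocol, where each model and each tool implements the protocol once (M + N). The counts in the example are illustrative assumptions, not measurements of the real ecosystem.

```python
def point_to_point(models: int, tools: int) -> int:
    """Each model needs its own connector for each tool: M x N integrations."""
    return models * tools


def shared_protocol(models: int, tools: int) -> int:
    """Each model implements the protocol once as a client and each tool
    once as a server: M + N implementations."""
    return models + tools


if __name__ == "__main__":
    # Illustrative counts only, not measurements of the real ecosystem.
    for m, n in [(5, 100), (20, 1_000), (50, 5_000)]:
        print(f"{m} models, {n:,} tools: "
              f"{point_to_point(m, n):,} point-to-point integrations vs "
              f"{shared_protocol(m, n):,} protocol implementations")
```

With 20 models and 1,000 tools, for instance, the point-to-point approach needs 20,000 integrations while the shared protocol needs 1,020.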

Previous solutions involved building “plugins” or “agents” for specific AI platforms. ChatGPT had plugins; Claude has its own tool-use format. But these are platform-specific. A plugin built for ChatGPT does not work with Claude or Gemini. Developers who want to make their tools available to AI users must build separate integrations for each platform, multiplying their workload and limiting adoption.

How MCP Works

MCP provides a standardized way for AI models to request information and take actions through external systems. Instead of building a separate integration for each model, developers can build a single MCP server that any compatible AI application can use. When the model decides to use a tool, the application hosting it sends a request in MCP’s format (JSON-RPC 2.0 messages); the server translates that request into whatever actions are needed, and the results flow back through the same channel.
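To make the request-and-response loop concrete, here is a minimal, stdlib-only Python sketch of a server handling two MCP methods. It assumes the message shapes described in the MCP specification: JSON-RPC 2.0 envelopes, a `tools/list` method for discovery, and a `tools/call` method whose result carries a `content` list. The calendar data and the `list_events` tool are hypothetical, and a real server would normally be built with one of the official SDKs and reached over a transport such as stdio or HTTP rather than called as a plain function.

```python
import json

# Hypothetical in-memory calendar standing in for a real application backend.
EVENTS = {"2025-06-02": ["Design review", "1:1 with Sam"]}

# Tool metadata the server advertises: a name, a description, and a JSON
# Schema describing the arguments the tool accepts.
TOOLS = [{
    "name": "list_events",  # hypothetical tool name
    "description": "List calendar events for an ISO date (YYYY-MM-DD).",
    "inputSchema": {
        "type": "object",
        "properties": {"date": {"type": "string"}},
        "required": ["date"],
    },
}]


def handle(raw: str) -> str:
    """Decode one JSON-RPC 2.0 request, dispatch it, encode the response."""
    req = json.loads(raw)
    method = req.get("method")
    if method == "tools/list":
        # Discovery: tell the client which tools exist and how to call them.
        reply = {"result": {"tools": TOOLS}}
    elif method == "tools/call" and req["params"].get("name") == "list_events":
        # Translate the generic call into the backend-specific lookup.
        day = req["params"]["arguments"]["date"]
        text = ", ".join(EVENTS.get(day, [])) or "No events."
        reply = {"result": {"content": [{"type": "text", "text": text}],
                            "isError": False}}
    else:
        # Simplification: a real server distinguishes unknown methods (-32601)
        # from unknown tools (-32602); this sketch lumps them together.
        reply = {"error": {"code": -32601, "message": "Method or tool not found"}}
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), **reply})


if __name__ == "__main__":
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "list_events", "arguments": {"date": "2025-06-02"}},
    }
    print(handle(json.dumps(request)))
```

The point of the design is that the server, not the model, owns the translation from a generic `tools/call` into the backend-specific lookup.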

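The protocol standardizes many of the details that make integrations tedious: how tools are described and discovered, how requests and results are formatted, and how errors are reported. Later revisions of the specification also define an OAuth-based authorization flow for servers reached over HTTP, while concerns such as rate limiting remain the responsibility of each server. A developer who builds an MCP server for, say, a calendar application does not need to worry about how different AI models will access it. They build once, and any MCP-compatible AI application can use it.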
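Error reporting is one of the details the protocol pins down. The MCP specification distinguishes protocol errors (a malformed request or an unknown tool, returned as a JSON-RPC `error` object) from tool execution errors (the tool ran but failed, returned as an ordinary result with `isError` set to true so the model can read the message and adjust). The Python sketch below illustrates that split. The `get_rate` tool, the `lookup_rate` backend, and its rate values are hypothetical placeholders, and authorization and rate limiting are not shown.

```python
def lookup_rate(currency: str) -> float:
    """Hypothetical backend: placeholder rates, fails for unknown currencies."""
    rates = {"EUR": 1.08, "GBP": 1.27}  # made-up values for illustration
    if currency not in rates:
        raise LookupError(f"No rate available for {currency}")
    return rates[currency]


def call_tool(req: dict) -> dict:
    """Handle one tools/call request, separating the two kinds of failure."""
    params = req.get("params", {})
    if params.get("name") != "get_rate":
        # Protocol error: the request names a tool this server doesn't have.
        # -32602 is JSON-RPC's "Invalid params" code.
        return {"jsonrpc": "2.0", "id": req.get("id"),
                "error": {"code": -32602,
                          "message": f"Unknown tool: {params.get('name')}"}}
    try:
        currency = params.get("arguments", {}).get("currency", "")
        text, is_error = f"{lookup_rate(currency)} USD", False
    except LookupError as exc:
        # Tool execution error: reported inside a normal result so the model
        # can read the message and decide what to try next.
        text, is_error = str(exc), True
    return {"jsonrpc": "2.0", "id": req.get("id"),
            "result": {"content": [{"type": "text", "text": text}],
                       "isError": is_error}}


if __name__ == "__main__":
    bad_tool = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                "params": {"name": "get_weather", "arguments": {}}}
    failing = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
               "params": {"name": "get_rate", "arguments": {"currency": "JPY"}}}
    print(call_tool(bad_tool))  # JSON-RPC error object
    print(call_tool(failing))   # result with isError set to True
```

Keeping execution failures inside the result, rather than as protocol errors, is what lets the model see and react to them.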

From the AI model’s perspective, MCP provides a consistent interface to the external world. Whether a tool reads a calendar, queries a database, calls a web API, or touches a filesystem, the model picks it by its advertised name and description, and the request and response follow the same format. This abstraction layer makes models more flexible and reduces the complexity of building AI-powered applications.
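On the client side, that uniformity means one routing layer can serve every tool. The Python sketch below discovers tools from several servers with `tools/list` and then dispatches `tools/call` requests by name, with no code specific to what each tool wraps. As simplifying assumptions, each “server” is an in-process function rather than a process reached over stdio or HTTP, and `make_server`, `next_meeting`, and `read_file` are hypothetical names used only for illustration.

```python
from typing import Callable

# A "server" here is any callable that takes a JSON-RPC request dict and
# returns a response dict; real MCP clients use stdio or HTTP transports.
Server = Callable[[dict], dict]


def make_server(tool_name: str, impl: Callable[[dict], str]) -> Server:
    """Hypothetical helper: wrap one function as a minimal single-tool server."""
    def serve(req: dict) -> dict:
        if req["method"] == "tools/list":
            result = {"tools": [{"name": tool_name,
                                 "inputSchema": {"type": "object"}}]}
        else:  # assume tools/call for brevity
            text = impl(req["params"].get("arguments", {}))
            result = {"content": [{"type": "text", "text": text}],
                      "isError": False}
        return {"jsonrpc": "2.0", "id": req["id"], "result": result}
    return serve


class Client:
    """Routes tool calls by name; never needs to know what a tool wraps."""

    def __init__(self, servers: list[Server]) -> None:
        self.routes: dict[str, Server] = {}
        self._next_id = 0
        # Discovery: ask every server which tools it offers.
        for server in servers:
            listing = server(self._request("tools/list", {}))
            for tool in listing["result"]["tools"]:
                self.routes[tool["name"]] = server

    def _request(self, method: str, params: dict) -> dict:
        """Build a JSON-RPC request with a fresh id."""
        self._next_id += 1
        return {"jsonrpc": "2.0", "id": self._next_id,
                "method": method, "params": params}

    def call(self, name: str, arguments: dict) -> str:
        """Send tools/call to whichever server advertised `name`."""
        server = self.routes[name]
        resp = server(self._request("tools/call",
                                    {"name": name, "arguments": arguments}))
        return resp["result"]["content"][0]["text"]


if __name__ == "__main__":
    calendar = make_server("next_meeting", lambda args: "Design review at 10:00")
    files = make_server("read_file", lambda args: f"(contents of {args['path']})")
    client = Client([calendar, files])
    print(client.call("next_meeting", {}))                  # Design review at 10:00
    print(client.call("read_file", {"path": "notes.txt"}))  # (contents of notes.txt)
```

In a real application the model would choose the tool name from the advertised names and descriptions; the client code stays the same whichever server answers.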

The Ecosystem Taking Shape

Since Anthropic introduced and open-sourced MCP in November 2024, adoption has accelerated rapidly. Major AI companies, including OpenAI and Google, have announced MCP support for their models and developer tools. Tool developers have created MCP servers for popular services. An open-source ecosystem has sprung up, with developers sharing implementations and building on each other’s work.

The result is a network effect familiar from previous platform standards. As more models support MCP, developers have more incentive to build MCP servers. As more servers become available, models that support MCP become more capable. This virtuous cycle can rapidly establish a standard as the default choice, even in competitive markets.

Google’s announcement in April 2025 of the Agent-to-Agent protocol (A2A) adds another layer. While MCP connects models to tools, A2A connects agents to each other, allowing AI systems to collaborate on complex tasks. Together, these protocols are building an infrastructure layer for AI that is openly specified rather than controlled by any single company.

Why Standards Matter More Than They Seem

The history of technology is largely a history of standards. Standards are not glamorous, but they determine who wins and who loses, what becomes possible and what remains impractical. The company or community that controls a key standard often controls the trajectory of an entire industry.

Consider the web. Tim Berners-Lee did not invent the most sophisticated hypertext system; he invented one that was simple enough to standardize. HTTP and HTML became universal because anyone could implement them. This openness allowed the web to grow explosively, but it also meant no single company could control it. Microsoft, despite its dominance of desktop computing, could never own the web.

MCP represents a similar moment for AI. If MCP becomes the universal standard for AI-to-tool integration, it will be harder for any single AI company to lock in users through proprietary integrations. Your tools and data become portable. Switching from one AI model to another becomes easier. Competition increases, innovation accelerates, and users gain power.

The Stakes for AI Development

The emergence of MCP comes at a critical moment in AI development. The major AI labs are racing to build more capable models, investing billions in training runs and competing intensely for market share. At the same time, concerns about AI concentration and safety are growing. Regulators are paying attention. Users are wondering who will control these increasingly powerful systems.

Open standards like MCP push against concentration. If all AI models can access all tools through a common protocol, platform lock-in becomes harder to achieve. Users can choose models based on capability and price rather than which tools are available. This is good for competition and potentially good for safety, since it reduces the winner-take-all dynamics that encourage reckless racing.

But standards can also be captured. Large companies sometimes embrace open standards early, gain dominant positions, and then extend those standards in proprietary ways. “Embrace, extend, extinguish” is the phrase long associated with Microsoft’s approach to internet standards in the 1990s. Whether MCP remains genuinely open will depend on how its governance evolves and whether the developer community can resist capture.

The Bigger Picture

MCP is easy to overlook. It is not a dramatic AI breakthrough like a model that can solve new problems or generate stunning images. It is infrastructure, plumbing, the kind of thing that works best when you do not notice it. But infrastructure shapes what can be built on top of it.

The roads and highways we built in the 20th century made suburbs and exurbs possible, reshaping how Americans live. The internet’s architecture, designed for resilience and openness, enabled social media, e-commerce, and the gig economy. The standards we adopt for AI integration will similarly constrain and enable what AI can become.

If MCP succeeds, we may look back on this period as the moment when AI stopped being a collection of isolated islands and became an interconnected system. Models that can access any tool, collaborate with any other model, and work with any data source are fundamentally more useful than models trapped in walled gardens. That usefulness will drive adoption, which will reinforce the standard, which will drive more usefulness.

The boring technology often wins. MCP is boring in the best possible way: it solves a real problem with a simple, generalizable approach. Whether it becomes the foundation of AI integration or is superseded by something better, it represents an important moment in AI’s evolution from impressive demos to practical infrastructure. The USB-C for AI might sound unglamorous, but it could be exactly what AI needs to become truly useful.